From small sensor modules to artificial intelligence: a range of technologies and image processing algorithms allow drones to see. Each application has different requirements for the vision system and depends on the available space within the drone and the required image quality. Dr. Frederik Schönebeck of FRAMOS spoke at the VDI conference on “Civil Drones in Industrial Use” and described the criteria for applications in mapping, object recognition and navigation as well as the relevance of artificial intelligence for drones.

A vision system consists, in most cases, of an image sensor, a processor module, a lens mount and a lens. For drones, the vision system must be particularly small and lightweight so that it has little impact on flight performance. In addition, high power efficiency and low heat generation are important, as there is usually only a limited power supply on board and any draw on it reduces the distance the drone can fly. Pure image and video streaming, where the drone does not derive any further actions from the scene, is called a non-functional application. Functional applications, on the other hand, use the images from the vision system to navigate, to avoid collisions or to initiate tracking-based follow-up actions. With the use of vision technology, functional drones are more intelligent and mostly autonomous. In both cases, the image quality must allow for an exact analysis and, for functional tasks, the images must be processed on board in real time. Combining these criteria (size, weight, efficiency, image quality and processing, to name a few) is a great challenge, even for embedded vision applications, and thus requires a careful selection and combination of the individual components.

UAVs Place High Demands on Embedded Vision and Image Sensors

The most suitable image sensor for drones and embedded vision systems is a CMOS sensor. This new industry standard achieves higher speeds and better image quality than its CCD counterparts due to its architecture, while also being significantly smaller in size. Developers can choose between global shutter and rolling shutter read-outs depending on their application needs. With a global shutter (GS), all pixels are exposed simultaneously, virtually freezing the motion in the image and thereby reducing motion blur and distortion. GS sensors are therefore suitable for taking pictures in high-speed, high-motion applications. However, the complex pixel design results in larger pixels and thus larger sensor dimensions and higher per-unit costs.

The rows of pixels in a rolling shutter (RS) sensor are exposed one after the other, which can cause artifacts in the image during movement. The design of RS sensors is less complex, resulting in higher sensitivity while making them smaller and less expensive than global shutter sensors. Drone developers should carefully consider all the advantages and disadvantages of both sensor types when choosing one for their system.
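To get a feel for how large rolling shutter artifacts can become, the following minimal sketch estimates how far the scene shifts between the readout of the first and the last sensor row. The function name and all numbers (row count, line time, speed, ground resolution) are illustrative assumptions, not values from a datasheet or from the talk.

```python
def rolling_shutter_skew_px(rows, line_time_s, speed_m_s, metres_per_px):
    """Estimate the scene shift (in pixels) between the first and last row.

    rows          -- number of sensor rows read out one after another
    line_time_s   -- readout time per row in seconds (assumed value)
    speed_m_s     -- speed of the scene relative to the camera in m/s
    metres_per_px -- size of one pixel projected onto the scene in metres
    """
    readout_time_s = rows * line_time_s          # delay between first and last row
    displacement_m = speed_m_s * readout_time_s  # scene movement during that delay
    return displacement_m / metres_per_px


if __name__ == "__main__":
    # Hypothetical setup: 3000 rows, 10 microseconds per row,
    # 15 m/s relative speed, 2 cm of scene per pixel.
    skew = rolling_shutter_skew_px(3000, 10e-6, 15.0, 0.02)
    print(f"shift between top and bottom row: {skew:.1f} px")
```

With a global shutter the readout delay between rows does not translate into a different exposure moment, so this particular shift does not occur.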

“A larger sensor always entails using a larger lens and a larger overall system. This has an impact on weight, price and power consumption, all important characteristics when designing drones. The benefits and costs have to be precisely balanced against the needs of the application,” says Frederik Schönebeck.

Note: Motion artifacts caused by the rolling shutter can affect the SLAM algorithm and thus the pose estimation of a drone. These effects can be reduced by combining the image sensor data with data from an IMU (Inertial Measurement Unit). Fusing image data with IMU data has the additional advantage of providing better positioning information for the drone in three-dimensional space, resulting in more stable and safer flight behavior. More can be read about this in the FRAMOS article “For Drones, Combining Vision Sensor and IMU Data Leads to More Robust Pose Estimation”.

Application Example: Mapping

Mapping applications use drones to create high-resolution maps. The drones usually fly over the area to be mapped at high altitudes. Vision systems for mapping drones must offer very high resolution, sometimes simultaneously in several frequency bands, and image quality is the most important criterion.

Large format sensors with resolutions from 50 to 150 megapixels and a >74dB dynamic range offer very good image quality and are particularly suitable for mapping applications.
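As a rough guide to what a dynamic range figure in decibels means, the sketch below uses the common definition of sensor dynamic range as the ratio of full-well capacity to read noise. The pixel values in the example are hypothetical and only chosen to land near the 74 dB mentioned above.

```python
import math


def dynamic_range_db(full_well_e, read_noise_e):
    """Dynamic range in dB from full-well capacity and read noise (electrons)."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


def dynamic_range_stops(full_well_e, read_noise_e):
    """The same ratio expressed in photographic stops (powers of two)."""
    return math.log2(full_well_e / read_noise_e)


if __name__ == "__main__":
    # Hypothetical pixel: 50,000 e- full well and 10 e- read noise,
    # which works out to roughly 74 dB.
    print(f"{dynamic_range_db(50_000, 10):.1f} dB")
    print(f"{dynamic_range_stops(50_000, 10):.1f} stops")
```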

Figure 1: Sensor formats and the associated image formats

A large sensor with a high resolution offers the advantage that a larger area can be recorded with one shot. This enables a faster overflight and more efficient surveying.
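The relationship between resolution and covered area can be sketched with the usual ground sampling distance (GSD) formula for a camera looking straight down. This is a simplified pinhole model, and the altitude, pixel pitch, focal length and sensor resolutions below are assumed example values.

```python
def ground_sampling_distance(altitude_m, pixel_pitch_m, focal_length_m):
    """Ground size of one pixel (metres) for a nadir-looking pinhole camera."""
    return altitude_m * pixel_pitch_m / focal_length_m


def footprint_m(altitude_m, pixel_pitch_m, focal_length_m, width_px, height_px):
    """Ground area covered by one shot as (width, height) in metres."""
    gsd = ground_sampling_distance(altitude_m, pixel_pitch_m, focal_length_m)
    return gsd * width_px, gsd * height_px


if __name__ == "__main__":
    # Same altitude, pixel pitch and lens, so both sensors resolve the
    # ground equally well; only the number of pixels differs.
    for name, (w, h) in {"48 MP": (8000, 6000), "140 MP": (14000, 10000)}.items():
        fw, fh = footprint_m(100.0, 4e-6, 0.05, w, h)
        print(f"{name}: {fw:.0f} m x {fh:.0f} m per shot")
```

At identical ground resolution, the sensor with more pixels covers more ground per image, so fewer flight lines are needed for the same area.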

Figure 2: Recording area of different sensor resolutions with the same image quality

Because the drone maps the earth’s surface from high altitudes, the relative speed at which it moves over the ground is low. The resulting motion artifacts in the image are therefore minimal and can be completely eliminated by a mechanical shutter, if required. In many cases, a more economical rolling shutter sensor is sufficient for mapping drones. However, large sensors require large lens mounts and lenses. In such high-resolution applications, the overall vision system needs a bigger footprint and more installation space within the drone, along with room for mechanical image stabilization, lens stabilizers, a gimbal or similar compensation methods.

Dr. Frederik Schönebeck says: “Basically, mapping applications in drones are advanced measurement systems using precise image processing.”

In order to save time and provide a good basis for mapping the ground, the captured images are preferably pre-processed via FPGA processors on board the drone. The developers must provide a strong processor architecture and sufficient memory capacity to store this large amount of data. The final processing is then done offline after the drone flight. Accordingly, the vision system does not require mobile data transmission. To generate higher accuracy and additional measurement data, mapping drones often couple lidar systems to their vision. These combinations and interfaces increase the complexity of designs and architectures which need to be accounted for in time and resource planning.

Application Example: Video Surveillance

Drones are the new normal in surveillance and security. They enable the observation and inspection of difficult-to-access terrain and extensive areas, while being inconspicuous and mobile. Video streaming is therefore one of the most common applications for drones. The unmanned flying objects must be small and have eyes in the truest sense of the word.

Important factors in developing drones for video streaming in surveillance are size, weight and cost. Typically, sensors are chosen with resolutions between 1 and 10 megapixels and an optical format of 4/3 inches or smaller. In addition to the sensor, lens mount (M12 to 4/3 inch) and lens, surveillance drones are usually equipped with an application-specific ISP. The drone evaluates the surveillance images in real time and, in functional systems, can initiate follow-up actions.

“For high quality image analysis and evaluation, especially under poor lighting conditions or in 24h operation, real-time applications in the security and surveillance sectors require sensors with a high dynamic range and high frame rates”, says Schönebeck.

In addition, the most important sensor selection criteria for surveillance drones are large pixels with a high full-well capacity, HDR modes and a high signal-to-noise ratio. The dynamic range can also be increased for higher contrast and sharper images without introducing motion artifacts into the image. Special surveillance sensors, such as Sony’s IMX294 with its Quad-Bayer structure, read out 2×2 pixel arrays in normal mode, so that four pixels can be combined into one “super pixel” for higher dynamic range. In HDR mode, two pixels of this quad array are integrated with a short exposure time while the other two pixels have a long exposure time (Fig. 3). This eliminates the temporal separation between the short and long exposures, enabling HDR images of moving objects to be generated with minimal artifacts (Fig. 4).
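The idea behind the two modes can be illustrated with a simplified sketch for a single 2×2 quad. This is a generic HDR merge written for illustration, not Sony’s on-chip processing: the function names, the averaging in normal mode, the 12-bit full scale and the saturation threshold are all assumptions.

```python
def bin_quad(pixels):
    """Normal mode (simplified): combine the four pixels of one quad by averaging."""
    return sum(pixels) / len(pixels)


def merge_quad_hdr(long_px, short_px, exposure_ratio,
                   full_scale=4095, sat_fraction=0.95):
    """HDR mode (simplified): merge two long- and two short-exposure pixels.

    Returns one value in long-exposure units. If the long-exposure pixels
    are close to saturation, the short-exposure pixels are used instead and
    scaled by the exposure ratio (long time / short time).
    """
    threshold = sat_fraction * full_scale
    if max(long_px) < threshold:
        # Long exposure is valid: it has the better signal-to-noise ratio.
        return sum(long_px) / len(long_px)
    # Long exposure clipped: recover the highlight from the short exposure.
    return exposure_ratio * sum(short_px) / len(short_px)


if __name__ == "__main__":
    print(bin_quad((100, 102, 98, 100)))                      # dark, normal mode
    print(merge_quad_hdr((1000, 1020), (62, 64), 16))         # mid-tone region
    print(merge_quad_hdr((4095, 4095), (500, 520), 16))       # bright region
```

Because both exposures of a quad are captured at the same time, the merge does not have to align frames taken at different moments.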

Figure 3: Quad-Bayer pixel structure in normal and HDR mode

Figure 4: The Quad-Bayer HDR image shows only minor artifacts

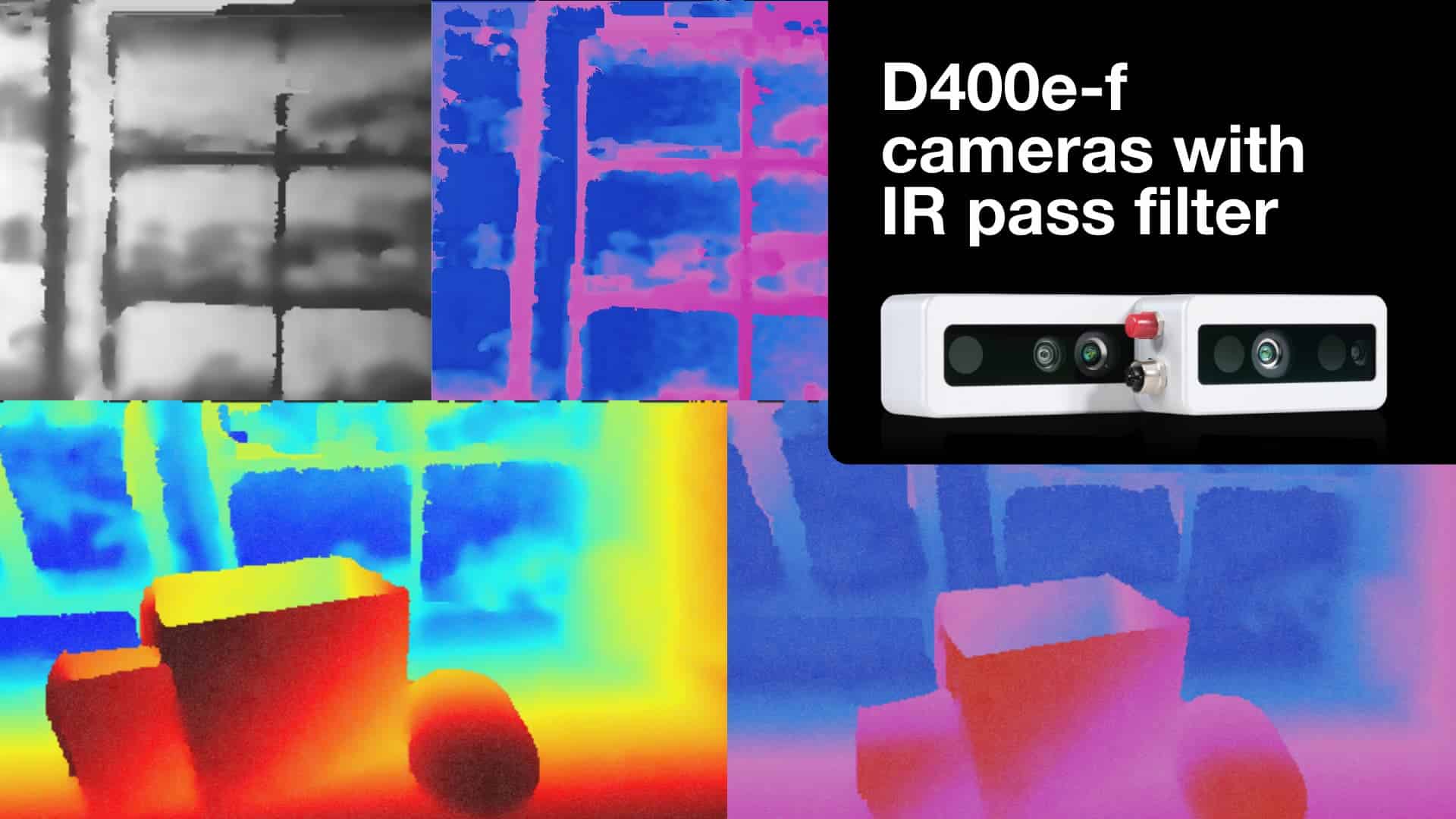

For night-time monitoring, additional features, such as infrared lighting integrated into the vision system, can improve image capture and recognition. If the application involves many changes in direction or strong acceleration forces, additional options for mechanical, optical or electronic image stabilization must be considered. If the elimination of motion artifacts is one of the main criteria in an application, a sensor with a global shutter should be selected from the start. For video encoding, Schönebeck recommends H.264 or H.265 for drones. The encoding compresses the image data with minimal loss of quality. This requires less space on the storage medium or a lower bandwidth for real-time transmission via Wi-Fi or 3G/4G/5G networks.
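Why compression matters for streaming becomes clear from a quick estimate of the uncompressed data rate. The sketch below only does the arithmetic; the resolution, frame rate, 12 bits per pixel (8-bit YUV 4:2:0) and the 8 Mbit/s link budget are assumed example values, and the actual ratio an H.264 or H.265 encoder achieves depends on content and settings.

```python
def raw_bitrate_bps(width_px, height_px, bits_per_pixel, fps):
    """Uncompressed video data rate in bits per second."""
    return width_px * height_px * bits_per_pixel * fps


def required_compression_ratio(raw_bps, link_bps):
    """How strongly the stream must be compressed to fit the link."""
    if link_bps <= 0:
        raise ValueError("link_bps must be positive")
    return raw_bps / link_bps


if __name__ == "__main__":
    raw = raw_bitrate_bps(1920, 1080, 12, 30)  # Full HD, 30 frames per second
    ratio = required_compression_ratio(raw, 8_000_000)
    print(f"raw stream: {raw / 1e6:.1f} Mbit/s")
    print(f"needed compression for an 8 Mbit/s link: about {ratio:.0f}:1")
```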

Application Example: Tracking and Identification

Functional drones use vision-based tracking to locate themselves within their environment and to navigate around and through the space, based on their defined flight path. Tracking enables Follow-Me drones to follow a human being or to fly around all kinds of obstacles. In concrete terms, drones use the 2D or 3D image data of a vision system to identify their environment and objects. Based on this data, the drone can control itself, adjust its flight direction and speed, land, and avoid or track objects. The vision data is partially fused with data from other sensors, such as IMUs, for more accurate pose estimation across all six degrees of freedom in three-dimensional space. In tracking applications, image quality is less important because the image is evaluated directly by the processor and the camera works essentially as a sensor.

The camera system of a drone, which relies on tracking, is usually deeply embedded in the drone’s control system and is rather small. Sensors having a 1/3-inch format with a resolution of up to 2 megapixels combined with M12 lenses represent the maximum size requirements. They are connected to complex SoC computing architectures, consisting of CPU, FPGA and ISP, and include many interfaces to other systems on board the drone. This complexity must be taken into account when calculating the development and integration time of a new drone design.

“In order to minimize motion artifacts and maximize the precision of the tracking data, the use of global shutter sensors is recommended,” says Dr. Frederik Schönebeck. For fast and easy generation of 3D data, stereo vision cameras or systems, as well as TOF (Time of Flight) sensors, can be used. The data generated by these devices are automatically evaluated by the SoC to make follow-up decisions autonomously. Since tracking drones do not focus on the quality of the captured image, they usually make extreme demands with respect to very small size, light weight and low cost.

How Artificial Intelligence Serves Object Recognition

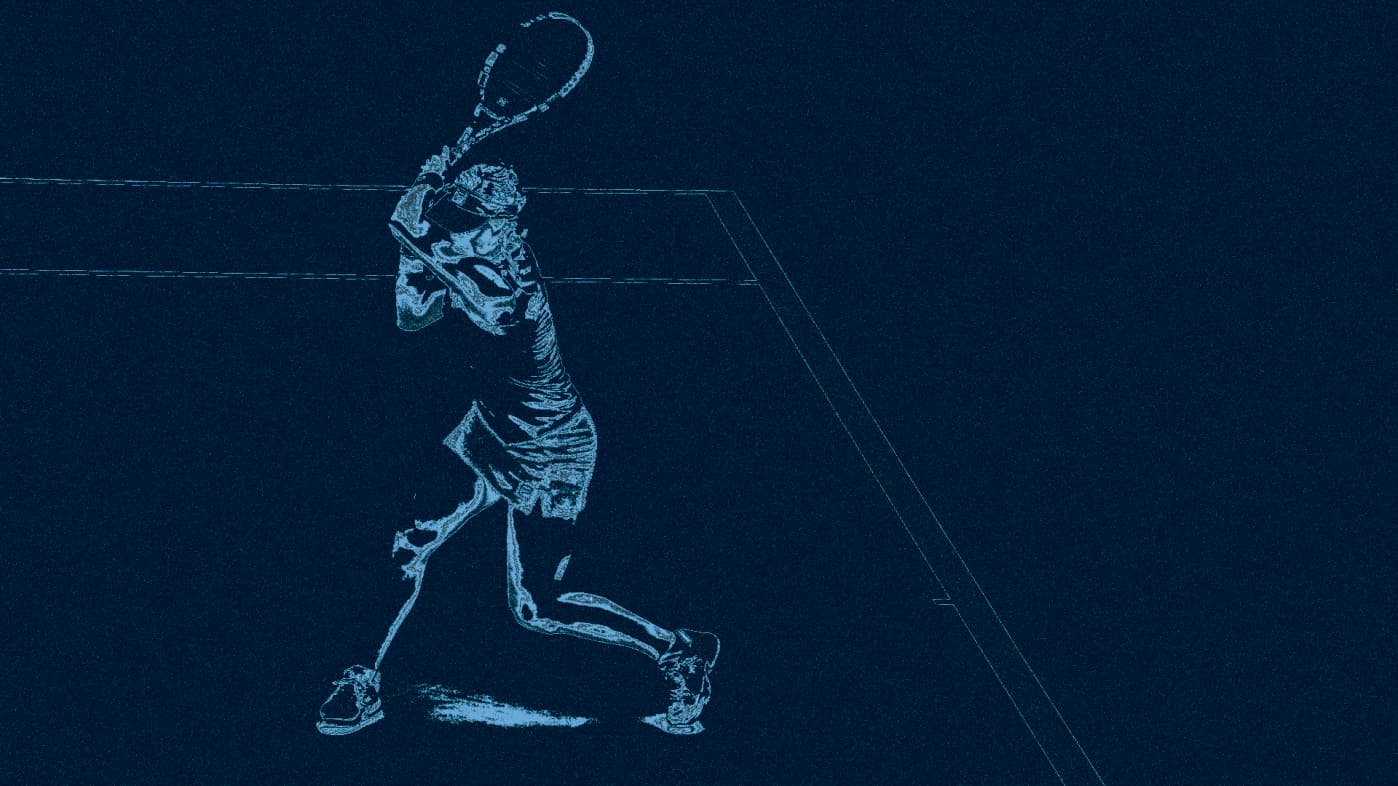

Deep learning algorithms, or artificial intelligence, provide more security for drones through more precise collision avoidance and enable autonomous tracking of objects and persons. For collision avoidance, the drone must detect obstacles such as walls, trees or other objects independently and in real time, and perform precise evasive maneuvers. During tracking tasks, the drone automatically detects the object of interest and can follow it on its own.

Figure 5: Artificial intelligence helps drones to better identify objects and people

For these tasks, neural networks are trained to recognize objects. At least 10,000 images, sometimes up to several million, are required to provide reliable training data for machine learning on high-performance hardware such as a GPU. The trained network then allows object recognition algorithms to run on a small, energy-efficient processor architecture, such as an ASIC.

AI Supports Stereo Matching

Neural networks not only help with object recognition, but also with the actual creation of depth information in a 3D camera and lead to more precise results.

Stereo vision methods determine distance by triangulation between two cameras to generate the 3D data. Stereo matching finds corresponding reference points in the left and right image; the offset between them is the disparity. The resulting “dense disparity map” contains the third dimension as color coding: the smaller the disparity and the darker the respective point is displayed, the further away it is from the camera.
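The inverse relationship between disparity and distance follows from the standard triangulation formula for a rectified stereo pair, Z = f · B / d. The sketch below assumes an idealized, calibrated setup; the focal length in pixels and the baseline are hypothetical example values.

```python
import math


def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Distance (metres) of a point seen by a rectified stereo camera pair.

    focal_length_px -- focal length expressed in pixels
    baseline_m      -- distance between the two camera centres in metres
    disparity_px    -- horizontal offset of the point between left and right image
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        return math.inf
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 700 px focal length, 12 cm baseline.
    for d in (84, 42, 21, 7):
        z = depth_from_disparity(700, 0.12, d)
        print(f"disparity {d:>2} px -> {z:.1f} m")
```

Halving the disparity doubles the distance, which is why distant points are especially sensitive to small matching errors.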

Training of the neural network uses one image, such as the left one, as the comparative data set, while the right image functions as “ground truth”. For stereo matching, this training data is optimized for the specific application in terms of distance, perspective and scenery. This allows the edges of objects to be detected more robustly and minimizes so-called “flying pixels”, i.e. outliers in the depth map. In addition, a “confidence map” is created, which helps to estimate the quality of the depth information and thus to make more robust decisions.
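One simple way a confidence map can be used downstream is to discard depth values that the matcher itself rates as unreliable. The sketch below is only an illustration of that idea: the function name, the 0-to-1 confidence scale and the threshold of 0.5 are assumptions, and the maps are plain nested lists rather than the output of any particular stereo library.

```python
def mask_low_confidence(depth_map, confidence_map, threshold=0.5):
    """Replace depth values whose confidence is below the threshold with None."""
    return [
        [depth if conf >= threshold else None
         for depth, conf in zip(depth_row, conf_row)]
        for depth_row, conf_row in zip(depth_map, confidence_map)
    ]


if __name__ == "__main__":
    depth = [[1.0, 2.0],
             [3.0, 9.9]]   # 9.9 m is an outlier ("flying pixel") at an object edge
    confidence = [[0.9, 0.8],
                  [0.7, 0.1]]
    print(mask_low_confidence(depth, confidence))
```

A collision avoidance routine could then ignore the masked values instead of reacting to an obstacle that is not really there.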

Figure 6: Neural networks help in stereo matching for exact detection of edges

What Drone Developers Must Consider

In short, every drone application is different and requires a specifically optimized camera system. It is important to find the best compromise between image quality, power consumption, computing power, size and weight. In particular, the desired image quality, the sensitivity to motion artifacts and the choice of sensor influence the size of the overall system. This applies especially to drones that rely on embedded vision, where the cameras are deeply integrated into the drone’s electronic design. Interfaces to other drone systems increase the complexity of the architecture, adding to design and integration times for new drone developments. Powerful on-board processing is also required to control the drone via image data in real time, which can eat into the power budget. Artificial intelligence, such as neural networks, improves the precision of autonomous drone control and object recognition, and the trained algorithms can run on small ASICs. Given all these factors, a drone designer may need to trade off performance for power or space. Knowing in advance all the factors that can influence a drone’s design helps ensure that the best decisions are made to minimize costs while maximizing performance. See our article explaining whether to build or to buy a vision system.

Figures 1, 2, 3 and 4: (c) Sony Semiconductor Solutions; Figure 5: (c) Smolyanskiy et al. 2017 https://arxiv.org/abs/1705.02550